Facebook Destroys Everything: Part 2

How Mark Zuckerberg's "pivot to video" made the world worse.

It was April 2016, and Mark Zuckerberg, clad in his usual incredibly expensive cotton t-shirt, told the world that his website - and thus, the entire Internet - was headed to a video-filled future, where live broadcasts and snappy, “snackable” content would push out the old, boring world of words.

Mark told the world that he knew this because he had the data: he knew for a fact that people were spending lots of time watching video, and simply couldn’t get enough of punchy video ads. Anxiety-filled media companies and publications, already wondering if video was the play of the future, scrambled to answer the call.

Just a few months later, Facebook admitted it had made yet another one of its signature, whimsical little oopsies. It had fucked up the math: it had overestimated video viewership metrics by, it said, about 80 percent. Or, according to the advertisers who later sued it, by as much as 900 percent. Somewhere in that ballpark.

But the evidence that Facebook lied came out too late. The lumbering executive minds of great lumbering companies had already been made up. Print reporters were laid off en masse, and many of those who survived were pressured to spend less time messing around with icky, unprofitable words, and more time making fun little videos.

And like many millennials who had once dreamed of reporting careers, I watched the bloodbath and regretfully decided that I wasn’t going to bother with pursuing another full-time journalism job either.

Despite all the cuts and the reshuffling and the chaos, the profits that Mark Zuckerberg had promised journalism never arrived, and remained a blue-shaded mirage on the far-off horizon. In late 2019, Facebook coughed up $40 million to advertisers to settle a lawsuit they'd filed against the company, claiming (it seems, accurately) that Facebook had flagrantly lied to them about how much time users actually spent viewing video ads.

While the media industry eventually concluded that the Pivot to Video had been a terrible mistake, the jobs lost in the process never came back. And Facebook, or Meta, or whatever the terrible thing is called, has soured on journalism too. It's a far cry from the friendly overtures, made with a handgun hidden behind its back, that the company was making to the media less than a decade ago.

This summer, in a particularly petulant act, Meta announced that instead of complying with a new Canadian law that would require social media companies to pay news publishers, its sites would block all links to Canadian news sites. Threads, for its part, has rejected journalism entirely, in favor of content - ah, that hideous, bloodless word! - that Threads and Instagram lead Adam Mosseri has deemed more uplifting, more marketable.

Sum it all up, and you’re left with the conclusion that Facebook seduced the entire journalism industry with promises of riches and security, then turned around and shot it in the knees - not to kill it immediately, but to ensure that it’d bleed out slowly instead. And we’ve all been left to suffer with the results, in a world where fewer and fewer people can make any kind of meaningful living from finding the truth hidden within the great morass of disinformation that the Internet churns out, like guano from an island full of shouting, shitting seabirds.

Are you starting to detect a pattern here, a through-line, a single blue vein running like the shit-filled intestines of a shrimp through the last decade and a half of lies, conflict, corruption, and death? But I can’t address every stunningly ethics-free and immoral thing Meta has done. Not in one article.

I’d be working on it for years, or I’d eventually, after staring too long into one company’s seemingly inexhaustible reserve of unpunished and incredibly public crimes, go mad. I can only run through the violations and the failures as they come to mind, the ones that made the biggest impression on me.

For me, in my narrative, first there was Myanmar, and then came 2016 - that venom-filled year in which I realized that the evils that Facebook had unleashed on Myanmar were coming home. When I first started watching what was happening in Myanmar in 2013, many students of social media culture, like me, operated under the hopeful assumption that the country’s Facebook-enabled descent into hell could at least partially be chalked up to a lack of online literacy.

We reasoned that countries like the US had a solid 20-year head start on being online over places like Myanmar, and that the general global public simply needed time, and perhaps some carefully crafted public education, to get a better sense of what was real and what was dangerous bullshit on the Internet.

We were incredibly wrong.

It turned out that the evils enabled by Facebook, and by social media in general, were much more deeply rooted in the tar-filled recesses of the bad bits of the human mind than that. And as the shadowy creeps at Cambridge Analytica secretly sifted through my Facebook data and that of everyone else, I watched my algorithmically barfed-up feed with an ever-increasing sense of nausea, realizing that it could happen here.

And it did.

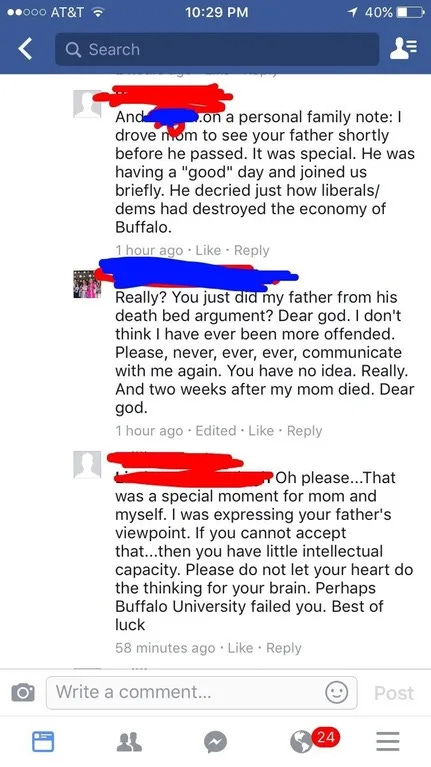

The second cousins of people I'd vaguely known in high school accused my actual friends of being Soros-funded shills for a global Jewish conspiracy. I watched as real-life friendships crumbled, families decided they'd never speak to each other again, and parents accused children of being blood-sucking, welfare-exploiting Communists.

I spent hours a day sucked into pointless, deranged political fights with people I'd never met before, as Facebook's nasty little algorithm zeroed in on exactly what was most likely to push me over the edge into the Red Mist. The site was always terrible at figuring out which ads would appeal to me, but it did get pretty good at figuring out how to make me stroke-inducingly angry.

Eventually, I came to recognize that the site was twisting human relationships into dark and unrecognizable shapes, working to reshape our conversations and our thoughts into patterns legible to marketers: transforming us into creatures easier to sell to, easier to keep locked up inside the confines of Facebook's ecosystem. I knew all this and yet, as we got closer and closer to the election, I stayed on the repulsive thing, unable to resist watching the fighting, the weird digital-media-enabled derangement that seemed to have spread to everyone on the Internet.

Then, Trump won.

That finally broke Facebook's hold on me. The site repulsed me even more than it had before, as I realized that it had played a decisive role in helping something dark and disgusting in the human mind manifest in a new, far more dangerous, real-world form, and that, by adding my own voice to the collective scream that had come to define the site, I'd helped bring it all into being too. In early 2017, I mothballed my account, scrubbing all the data and removing all my friends.

Did you know that if you deactivate your account, Facebook will keep tracking your data, on the theory that you might come back someday? And did you know that even if you delete your account, even if you've never had one to begin with, Facebook will create a zombified shadow profile for you anyway, one that might include sensitive health data you've entered into your medical provider's website? Were you aware that Facebook will, at best, take its sweet time cracking down on scammers who appropriate your name and your identity so they can better exploit your elderly relatives? (Or never deal with them at all.) And what's more, were you warned that once you've made a Threads account, you can't delete it without deleting your Instagram account as well, an issue that the company swears it will fix eventually, one of these days/months/decades?

After I left Facebook, I turned my attention to Twitter, which was, while a cesspool, a cesspool I found much more suited to my particular slop-seeking tastes. Twitter's developers had never figured out how to monetize user data in the grim and shark-like way Facebook had, and Jack Dorsey largely appeared to be too busy gobbling up magic mushrooms and studying erotic yoga poses to make progress on the problem. The site was designed in such a way that I never found myself screaming at someone's gibbering fascist uncle with a soul patch in darkest Missouri, and it was much easier for me to simply block and ignore the weird conservative wildlife that did, on occasion, stumble across my profile. And most importantly, Twitter never made me feel quite as debased, as repulsive, as angry as Facebook did.

When the Cambridge Analytica revelations came out in 2018, revealing that a political consulting company had been quietly exploiting user data that Facebook had failed miserably to protect, I felt both horrified and validated. And I was pleased to see that Facebook's previously relatively clean public image, already tarnished by how repulsive many people found the site in the lead-up to Trump's election, was finally, finally beginning to take serious damage.

Sure, tons of people still used Facebook, but signs of weakness were appearing, hints that younger, cooler people were beginning to back away from a website that seemed engineered to allow their weird Trump-loving great-uncles to yell at them. Indications that Gen Z kids increasingly regarded Facebook as a place they’d only use (maybe) to wish their grandparents a happy birthday, not a site where they’d ever want to actually hang out. But Instagram was still popular, and Facebook owned that, and WhatsApp was still globally pervasive, and Facebook owned that too. The same blue sheep-paddock, as Meta had correctly deduced, could be made to take on many forms.

Zuckerberg apologized for Cambridge Analytica, just as he did when his company was called out for abetting genocide in Myanmar. Zuckerberg went on another one of his Apology Tours in public, while the company (largely behind the scenes) rolled over and pissed at the feet of GOP politicians and MAGA emperor-makers, and ceded to the ever-changing, deranged whims of Donald Trump. Zuckerberg even agreed to a photo-op with Trump in the White House, which the President saw fit to post first on Twitter.

And while people trusted Facebook a lot less than they had in 2016, the site, and the company, still seemed horribly inevitable. People had fallen out of love with Facebook, but many of us were getting the uncomfortable feeling that soon, our personal feelings wouldn't matter anymore. That Mark Zuckerberg's company was building towards a future where getting a Facebook account would no longer be an actual consumer choice, but a price you'd be forced to pay just to get on the Internet, or to pay your taxes, or to set up a doctor's appointment.

Exhibit A of this unsettling world-domination strategy? Libra, Facebook's now-failed June 2019 universal cryptocurrency boondoggle that the company claimed would use the blockchain, or whatever, to help connect the world's underbanked and digitally isolated people with the global financial system. It was a financially focused rebrand of Meta's now-flailing Internet.org strategy to get the entire world onto Facebook (and, incidentally, the Internet), the same effort that had helped ensnare Myanmar. Regulators almost immediately responded with suspicion - to their credit - but the company doggedly pressed on for a while.

Also connected to Libra, in terms of overall strategy, was Facebook's new effort to map the entire world with imagery pulled from satellites and drones, using computer vision tools to suss out population figures for 22 different countries, followed up by maps doing the same thing for most of the African continent. Facebook's messaging around the project, much like Libra's, emphasized the warm and cuddly impacts, focusing on how the data would be used to support charitable causes and humanitarian response efforts. Its releases discreetly ignored the profit motive: why would such a gigantic, publicly traded company pump such vast sums of money and human resources into supposedly charitable projects?

For me, and a lot of other Facebook-cynical observers, the unspoken answer was obvious. They were doing all this to herd even more of the planet into their own walled garden, allowing the company to profit off ever more human data, from ever more aspects of modern digital life.

What Zuckerberg seemed to want was for the world to view his Facebook as more than just a tech company - as more like an inevitable, unstoppable natural phenomenon. The kind that moves fast and breaks things. And places. And people.

People like Facebook’s content moderators.

Contract employees paid only somewhat above minimum wage, hired by vendors with intentionally bland names and stationed in satellite offices around the world, in locations as far away from Facebook's actual, highly compensated employees as possible. People who spend their entire workday staring into the dark and rotting heart of humanity's absolute worst impulses, clicking through scene after loathsome scene of screeching men slowly having their heads sawed off, kittens loaded into blenders, Holocaust deniers and mass-shooting victims. Human big-tech byproducts who can access a perfunctory amount of mental health support, but who are also achingly aware that they'll be out on the street if they make a few mistakes in the course of viewing a tsunami of horror.

I have some small sense of what it is like to gaze long into the digital abyss, due to my reporting and research work around conflict and war crimes - but then again, I have no idea at all, because I willingly and knowingly chose to look at these things, was compensated fairly, and received praise and platitudes for taking on the burden. In late 2020, American Facebook moderators settled with the company for $52 million, cash intended to compensate both current and former moderators for the psychological damage they'd taken on in the line of duty. Facebook also agreed to introduce content moderation tools that muted audio by default and switched video to black and white, small changes intended to make viewing evidence of a blood-soaked world more bearable.

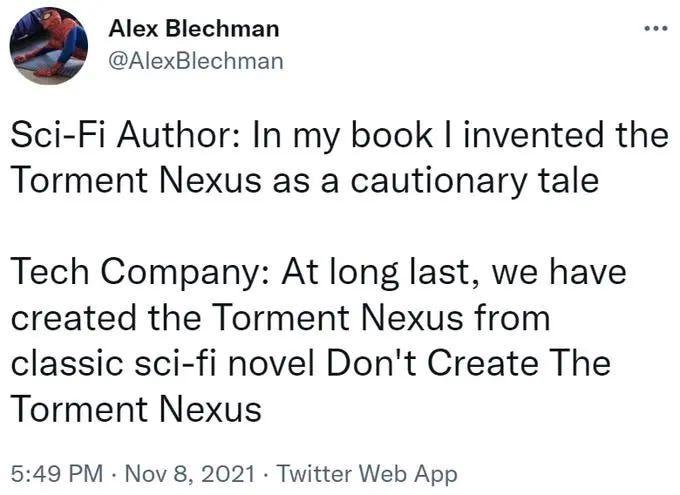

But of course the problem isn't fixed. Of course, Facebook is Still Working On It. This summer, Facebook moderators in Kenya launched their own lawsuit, mirroring the one filed by their American counterparts, seeking $1.6 billion to compensate them for miserable working conditions, inept psychological counseling, and crippling psychological damage, and for lost jobs, as some moderators claim they were fired in retaliation for attempting to organize a union. On social media, we joke, in a way that's not really joking, about how our tech overlords have created the Torment Nexus, about how we're locked in a psychological hell we can't escape.

Some of us much more than others.

More next time.

Here’s part one of this series, covering how Facebook abetted genocide in Myanmar: