Welcome to the Twitter Abyss

Elon Musk's depraved, utterly irresponsible failure to moderate Twitter is harming innocent people.

For almost as long as Twitter has existed, users have known that the place is a cesspool. A hellsite, albeit a hellsite that could be both informative and a lot of fun at certain times, and in certain contexts. A globally relevant portal where genuinely useful information mingled with an endless stream of depressing news, shouting bigots, hardcore porn, and bot-driven gibberish.

But as it turns out, Twitter could get a whole lot worse.

Over the last few weeks, under Elon Musk’s King Dumbass oversight, Twitter appears to have given up on basic content moderation and filtering. Innocent users who thought they were protected are now being exposed to a regular stream of hardcore porn, gore, and animal abuse. A once semi-reputable website has, in just a month or so, turned into a bargain basement version of 8chan with even lamer humor.

I discovered this the hard way the other day.

I’ve barely touched Twitter over the last three weeks, engrossed as I am in flooding Bluesky with dumb jokes about nostalgic 1980s puppets. But on May 9th, after Tucker Carlson announced he was moving his show to Twitter, I dipped my toes back in the water. I was looking for funny reactions from people with horrible Tucker-loving older relatives who couldn’t figure out how to get onto Twitter.

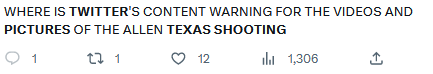

While I did find those Tweets, I also noticed something way more disturbing in Tucker’s mentions. Lots of uncensored, horrifyingly graphic pictures of the horrendous May 6th shooting in Allen, Texas, at a frequency that I was not at all used to seeing on Twitter.

I began to wonder if something was up.

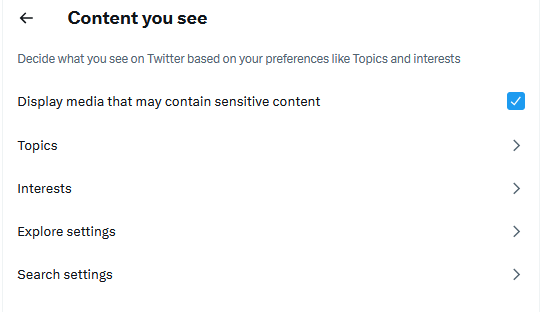

I turned autoplay on, which is Twitter’s default setting, and switched on the setting that filters sensitive content. (By the way, if you have an Apple device, you can only access these settings via the web, not in the app.) I figured this configuration would closely approximate how many users interact with Twitter.

Then, I started searching for terms related to the shooting. Sure enough, the website served up the graphic images immediately, right amongst links to stories by real news outlets and emotional reactions from the public. In many cases, the content filter failed to catch them.

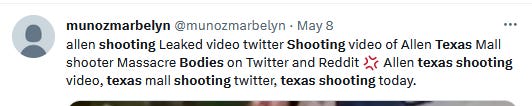

Some of the videos and pictures that weren’t caught by content filters appeared to be posted by keyword-farming bots, often complete with profile pictures of attractive young women. Apparently, Elon’s alleged war on bots hadn’t extended to the very worst kind of bot there is.

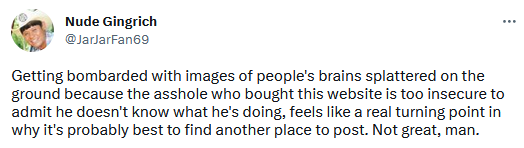

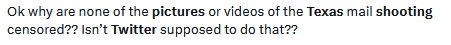

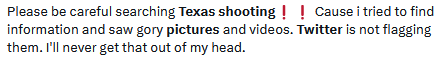

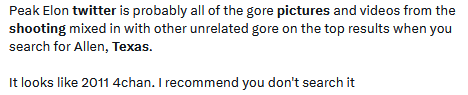

As I searched, I also noticed many comments from people who had, much to their horror, unexpectedly seen images from the shooting after they’d tried to search for news.

The situation was so bad that the New York Times ran a story on it, quoting a number of outraged users. As far as I know, Twitter still hasn’t officially responded to the complaints in any way.

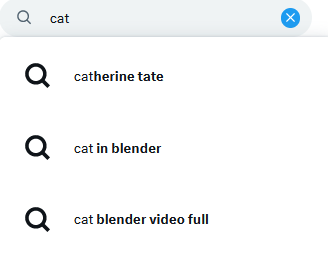

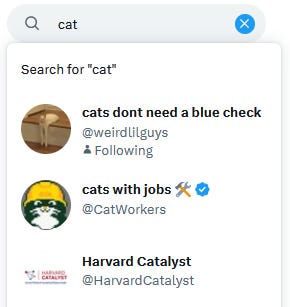

And then, the next evening, some of my mutuals on Bluesky started noticing there was something horribly, horribly wrong with Twitter’s autosuggest function. One person I follow reported that while searching for the word “cat” (you know, cat videos, cute cat pictures, the very currency the entire Internet has run on for as long as I can remember), the site suggested this:

I rushed over to Twitter to try it out for myself.

And it was true: for just about every popular pet I could think of, Twitter was suggesting something mind-bendingly horrible.

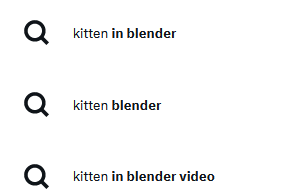

Kittens:

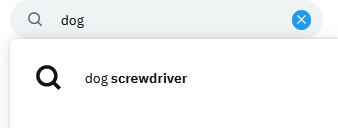

Dogs:

Hamsters:

You get the horrible picture.

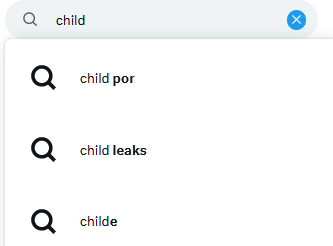

Then, I tried typing the word “child” into the search box. This is what I got.

Jesus Christ.

Twitter wasn’t just suggesting that people look at animal abuse: it was also, apparently, facilitating people who were searching for child sexual abuse material.

I immediately posted about this on Bluesky and Twitter, and so did NBC reporter Ben Collins, as well as a number of other people who’d picked up on this at about the same time. That evening, on May 10th, we could actually watch in real time as some poor Twitter employee attempted to fiddle with the settings to fix the problem. Eventually, after a few hours, they gave up. Twitter’s auto-suggest feature was turned off entirely.

As I write this, on May 12th, it’s still down.

We don’t know why this auto-suggest debacle happened, although we do have a pretty good theory. As former Twitter Trust and Safety head Yoel Roth said on Bluesky, and repeated to Ben Collins, he believes the company somehow dismantled an extensive set of existing safeguards, built through a process that combined both automatic and human moderation. (He remembered cross-referencing 10,000 lines of the old blocklist with terms in Urban Dictionary.)

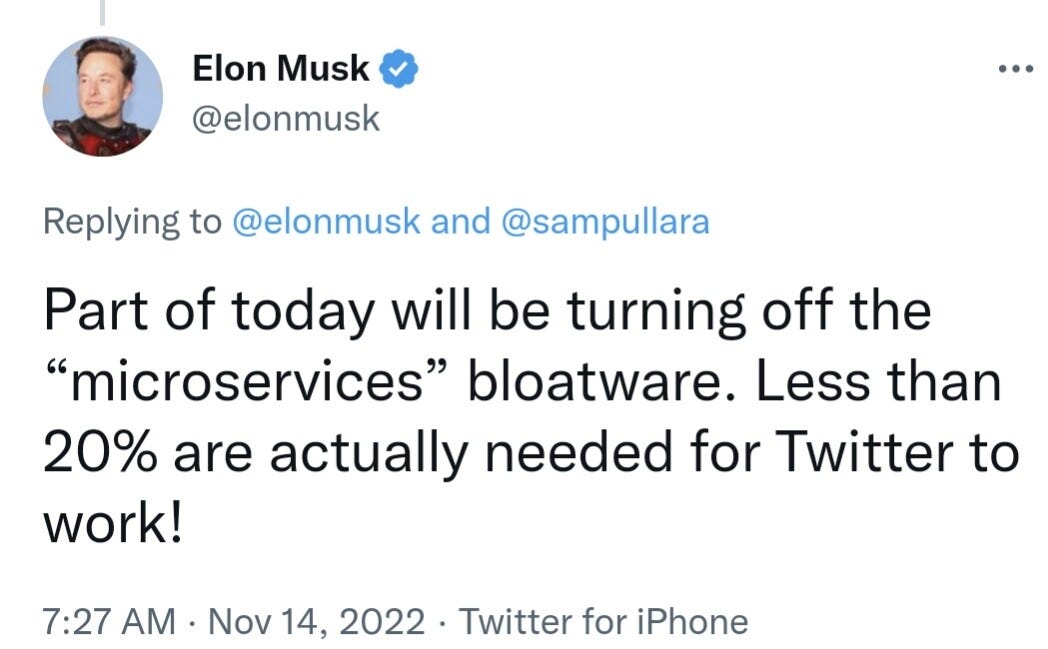

While it’s unclear how Twitter actually managed to break it, it seems eminently possible that it’s linked to Elon’s dumb-ass, supposedly cost-saving war on microservices. Combine that with Musk’s decision to totally gut the Trust and Safety team early on in his takeover, and well, here we are. While it’s less clear why Twitter’s content filtering has also become so broken, I have to imagine the two problems are related.

So much for the details.

Let me turn to the likely consequences and how I feel about them, which I can summarize thusly:

Elon Musk’s hideously irresponsible actions are directly responsible for traumatizing god-knows-how many children and adults over the last few weeks, and I pray that some blood-thirsty and vengeful regulator, somewhere, will make him pay a price he actually cares about for allowing something this awful to happen.

In 2023, as fact-checking expert Kim LaCapria reminded me on Bluesky, most people have grown pretty accustomed to dealing with a relatively highly filtered, content-screened Internet. For many very young people - spared the surprise links to horrible images from Rotten.com that traumatized so many millennials - this is the only Internet they’ve ever known.

While this filtering is of course wildly imperfect and subject to failure, Twitter, Facebook, YouTube and other sites did, over the last decade, largely manage to keep the world’s most horrifying content out of most people’s feeds. (The kitten video is also circulating on TikTok, although it appears to have first surfaced on Twitter.)

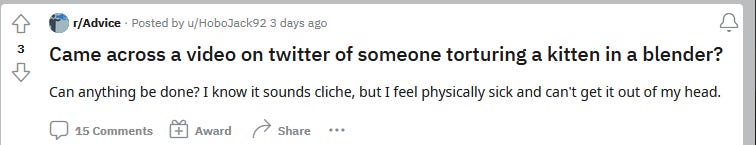

This means that when your average Twitter user types the words “Texas shooting” into the search box, they’re expecting to see news stories and comments about the tragedy. They are not expecting to be quickly confronted with imagery of the bodies of the dead - imagery which often now evades Twitter’s content filters, and, even worse, autoplays. And when they type “kittens” into the search box, they’re not expecting to see videos of animal abuse - like this poor Reddit user, who saw it on autoplay, and now can’t get the image out of their head.

Today, only fools and the ignorant believe that looking at nightmarishly graphic imagery on the Internet is no big deal. We know the hideously poorly paid people who moderate content for sites like Facebook are at very high risk of developing PTSD. We also have evidence that, for black social media users, repeated exposure to online video of the police shootings of other black people can lead to serious mental health consequences.

Although we have less clear evidence on what viewing ultra-violent, real-world imagery online does to children (which is not surprising, considering that it’d be very hard, if not impossible, to study), it’s hard to imagine the impact is good.

And kids are seeing it.

In Ben Collins’s recent NBC story, a woman in London reported that her 11-year-old son had heard about it from other kids at school. A teacher on Reddit says a middle school student unexpectedly shoved the video in her face, and another teacher on Bluesky said that kids in his sixth-grade classroom were talking about seeing it.

Some people argue the only antidote to American mass shootings is forcing the public to confront the grisly reality of what they look like. Even if we grant that that’s true (and the jury is very much out on the actual effectiveness of such a move), sharing grisly images for that purpose would absolutely need to be done in a thoughtful, careful way, with the full consent of the victims’ families. Those images should not be casually spread by blue-checkmark idiots all over a shambling, dying social media network so Elon Musk can make even more money, where they will, inevitably, traumatize people.

While some professionals do look at graphic imagery online on a regular basis and come out relatively unaffected, doing this requires a combination of training, experience, perspective, and (most importantly) consent.

I know this is true because it’s what I do. I look at very graphic and disturbing stuff on the Internet as part of my research work on the war in Ukraine - something that I willingly choose to do, could stop doing at any time, and have the requisite skills needed to do in a safe way.

None of this applies to innocent children and adults who are logging into Twitter, expecting it to function approximately the same way it always has, and being confronted with much more graphic, nightmare-inducing imagery than they’re used to seeing.

While Elon Musk’s defenders will doubtless try to say the blame really lies with the sickos who posted that content in the first place, it’s a dumb argument, because it ignores the very obvious fact that Internet sickos who prey on the innocent are a depressing fact of life, as inevitable as the tides.

If you run a social media platform, it is absolutely ethically - and usually, legally - incumbent upon you to protect the nice normal people who use your site from the creeps who want to harm them. Elon Musk hasn’t just failed to live up to this responsibility, he’s actively laughed in the faces of everyone who’s tried to hold him to it. And then sent automated poop emoji email responses to the people who’ve tried to contact Twitter to complain.

Indeed, Elon Musk’s idiot war on microservices he barely understands will, almost certainly, lead directly to more than a few people suffering mental health consequences, stuck for life with images they can’t get out of their heads.

German regulators apparently are set to fine Twitter for failing to remove illegal hate speech, and personally, I hope US regulators and others find ways to yank back the chain on Elon’s brain-dead reign of Internet terror sometime soon. Unfortunately, I’m not feeling very optimistic about regulators doing something about that rat-bastard emerald-thief either, although I’d love to be pleasantly surprised.

I also hope Twitter’s insanely irresponsible failures to engage in basic moderation chase away more advertisers. Fortune 500 companies, famously, aren’t thrilled about their ads running next to offensive content, and indeed, someone I know reported that he was served a McDonald’s ad right next to graphic imagery of the Allen shooting victims.

While incoming Twitter CEO Linda Yaccarino may have a wealth of experience in the advertising business, even her background won’t help if Twitter continues to be a place where advertisers can expect their content to rub shoulders with imagery of tortured pets. (I wouldn’t be surprised if she makes the classic right-wing play and tries to ban porn, instead of focusing on the much more dangerous problems of gore and open bigotry.)

Nor do I think that Twitter’s pivot to image-board levels of irresponsible moderation will be particularly attractive to users. Plenty of people I know, myself included, are already having a much better time on Bluesky than we’ve had in years: we’re not likely to be encouraged to return by the prospect of seeing even MORE things on Twitter that will haunt our dreams forever.

I also can’t imagine that the new wave of first-time Twitter users that Tucker Carlson will inevitably attract are going to love it either: current moderation practices mean that a lot of Mee-Maws and Pee-Paws are going to be confronted with both hideous violence and hardcore porn involving dragons.

As for you, reader, here’s my advice.

Stop using Twitter if you possibly can. (Bluesky is pretty great so far!)

Do everything you can to keep kids off of Twitter.

Warn your friends, family, and colleagues that Twitter has suddenly become a lot more dangerous than it used to be, and that they are very likely to see something disturbing if they do use it.

If you absolutely must use it, reduce your exposure: turn off autoplay and turn on sensitive content warnings. This won’t protect you from all the horrors modern Twitter features, but it will help. I’m still there, for the time being, for my Ukraine War research, but I am getting in and getting out as fast as possible. And I eagerly await the day when Bluesky starts supporting video, so I can flee entirely.

Read up on maintaining mental hygiene and dealing with horrifying imagery and vicarious trauma on the Internet. Even if you’re not directly exposed to these things, it’s useful information to have. Bellingcat is a great resource.

And finally, let’s all keep working hard on making sure everyone around us knows that Elon Musk isn’t some great genius, and that he’s not a visionary leader: he’s a piece of shit that can’t be bothered to do the absolute minimum required to avoid traumatizing children.